Friday, October 26. 2018

LDC #108: Using DVTs To Create Reliable Software

Writing new scripts can make tasks faster and easier than ever. Back in July, we talked about a script that can map endnotes in an HTML file to the correct page from which they came and move them there. This makes moving the endnotes to their proper positions faster and significantly easier than copy/pasting text around by hand. As we automate functions, we begin to rely on those functions working a specific, predictable way. If our scripts cannot be counted on to work correctly, then we didn’t really make anything faster or easier, did we?

Creating Design Verification Tests (DVTs) is an essential part of making sure our scripts function as they are intended, even if we modify them. The endnote script, for example, was designed iteratively. Each time a new style of endnote was encountered, we modified the script to handle it. In order to ensure that we aren’t breaking it for other cases, each style of endnote needs to be tested. We can write a second script to automate the testing process and to compare the results of our function with known, expected results. This ensures everything still works as it’s supposed to.

When writing DVTs, it’s helpful to use a consistent format for each test. We’re going to go over a DVT for the Endnotes to Footnotes script built from three primary functions that can be included in any DVT: the main function, which is the script entry point; the test_regimen function, which actually runs the test; and the display_difference function, which takes the contents of two files and logs any differences between them. Using these three functions, we can write tests for nearly any script, and the only thing that needs to be modified in each DVT is the test_regimen function, which will be specific to the script we’re testing.

Our test_regimen function is going to do something very simple to test if our script is working correctly. It just needs to take a file, run the Endnotes to Footnotes script on it, and then compare it to a known “good” file that has been acted upon by our script and has the desired outcome. If we do this for multiple types of endnote styles, we can verify if our function is working correctly or if something has caused it to not function in an expected way. The pattern of running a script on a file and comparing the output to a known correct output (which is also known as black box testing, since we’re not looking inside the script itself, and only comparing outputs) is probably the most basic way of testing a script’s functionality, but it’s undoubtedly effective. Let’s take a look at our DVT:

#define BEFORE "BeforeTool"

#define AFTER "AfterTool"

#define TEST "Test"

int test_regimen();

int display_difference(string a, string b);

Our script is going to use three defined values: BEFORE, AFTER, and TEST. These are suffixes applied to data files the script will consume. Any file with the suffix “BeforeTool” in the filename is a file that hasn’t had a script run on it. Any file with “AfterTool” as a suffix on the name is a file that has been converted and confirmed to be “good”. These files are our control: the output of each test run is compared against them. Any file with the “Test” suffix in the name is a file created by the DVT to be compared against the good files. We also have our function declarations here: the test_regimen routine runs the tests, and the display_difference routine takes the contents of two files as its input strings and compares them line by line, printing any differences to the standard log.
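The naming convention is easy to try outside of Legato. The short Python snippet below is only an illustration of the suffix swap, not part of the DVT itself. The helper name `companion_names` and the sample file name are hypothetical, and the sketch assumes the suffix appears exactly once in the file name.

```python
BEFORE = "BeforeTool"
AFTER = "AfterTool"
TEST = "Test"


def companion_names(start_fn):
    """Return (known-good name, test-output name) for a BEFORE input file."""
    if BEFORE not in start_fn:
        raise ValueError("not a test input: " + start_fn)
    # Swap the suffix, mirroring what the DVT does with ReplaceInString.
    return start_fn.replace(BEFORE, AFTER), start_fn.replace(BEFORE, TEST)


# Hypothetical sample name, used only to show the mapping.
good_name, test_name = companion_names("Sample1BeforeTool.htm")
print(good_name)
print(test_name)
```

Keeping all three names derived from one input name means a new test case only needs a matching pair of “BeforeTool” and “AfterTool” files dropped into the folder.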

int main() {

int rc;

ProgressOpen("Endnotes To Footnotes Test", 0);

rc = test_regimen();

if (rc == 0) {

MessageBox('I', "Endnotes To Footnotes Test Passed");

}

else {

MessageBox('X', "Endnotes To Footnotes Test FAILED.\r\r%d error(s).", rc);

}

ProgressClose();

return 0;

}

Our main function starts off by opening a progress bar with the ProgressOpen SDK function and then runs the test_regimen function to actually begin the test. If the result of the test was 0 (the test_regimen function should return the number of errors), then we can print a success message. Otherwise, we can print a fail message. Then we can close the progress bar and return.

int test_regimen() {

string fp_script;

string files[];

string start_fn;

string test_contents;

string success_contents;

string end_fn;

string test_fn;

int num_files;

int num_tests;

int num_failed;

int ix;

AddMessage("Endnotes To Footnotes DVT");

fp_script = GetScriptFolder();

fp_script = AddPaths(fp_script,"File Sets");

files = EnumerateFiles(AddPaths(fp_script,"*.htm"));

num_files = ArrayGetAxisDepth(files);

for (ix = 0; ix<num_files; ix++){

if (FindInString(files[ix],BEFORE)>0){

The test_regimen function starts out by printing a log message. After that, it gets the folder out of which the script is executing. It creates a path to the “File Sets” folder, which it’s expecting to find in the same directory as the test script. This folder should contain all of our test HTML files that we’ve added there over time. Once it has a path to that folder, it will use the EnumerateFiles function to get a list of all HTML files in the folder and iterate over each of those files with a for loop. If the file’s name contains our BEFORE suffix, we can assume it’s a file we should test, so we can run our test on it.

ProgressSetStatus(1, "Processing: %s", files[ix]);

num_tests++;

start_fn = files[ix];

end_fn = ReplaceInString(start_fn, BEFORE, AFTER);

test_fn = ReplaceInString(start_fn, BEFORE, TEST);

RunMenuFunction("FILE_OPEN", "Filename: "+AddPaths(fp_script,start_fn));

RunMenuFunction("EXTENSION_ENDNOTES_REMAP");

RunMenuFunction("FILE_SAVE_AS", "Filename: "+AddPaths(fp_script,test_fn));

RunMenuFunction("FILE_CLOSE");

Now that we have the name of the file we want to test, we can update our status bar with the ProgressSetStatus function and increment the number of tests run. We then need to build the names for our starting file (the one on which we’re running our test), our test file (that will be created by our script), and our end file (the previously confirmed “good” file) by using the ReplaceInString function to change the source filename to what we need. Once we have our file names, we can use the RunMenuFunction function to open the file, run our script, save the resulting file under the test name, and close the opened file. This way, we exercise the script as though a user were actually pressing the buttons, which keeps our test independent of how the script is implemented.

success_contents = FileToString(AddPaths(fp_script,end_fn));

test_contents = FileToString(AddPaths(fp_script,test_fn));

if (success_contents!=test_contents){

LogSetMessageType(LOG_ERROR);

AddMessage("Endnotes to Footnotes failure on file: %s",start_fn);

display_difference(success_contents,test_contents);

num_failed++;

}

else{

LogSetMessageType(LOG_NONE);

AddMessage("Endnotes to Footnotes success on file: %s",start_fn);

}

}

}

LogSetMessageType(LOG_NONE);

AddMessage("Ran %d tests on Endnotes to Footnotes, %d tests failed.",num_tests,num_failed);

return num_failed;

}

After creating all the files, we can read the contents of the created test file and the good end file with the FileToString function and check to see if they are identical. If not, we need to set our log type to error, display an error message that this test failed, and run our display_difference function to print out what’s different about the files. We can then increment the number of failed tests. If the files matched, on the other hand, we can just set the log type to no error and print out a successful test message. Once we’ve iterated over each file, we can set the log type back to no error and print out a message saying how many tests were run before returning the number of failed tests.
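The same run, compare, and count pattern can be sketched in a few lines of Python. This is a language-neutral illustration under stated assumptions rather than a port of the Legato code: the function name `run_regimen` and its `transform` parameter are hypothetical, the stand-in transform simply upper-cases text, and the comparison happens in memory instead of saving a “Test” file and reading it back.

```python
import tempfile
from pathlib import Path

BEFORE, AFTER = "BeforeTool", "AfterTool"


def run_regimen(folder, transform):
    """Apply transform to each BEFORE file and compare with its AFTER file.

    Returns (tests_run, tests_failed).
    """
    num_tests = 0
    num_failed = 0
    for start in sorted(Path(folder).glob("*.htm")):
        if BEFORE not in start.name:
            continue  # known-good and generated files are not inputs
        num_tests += 1
        good = start.with_name(start.name.replace(BEFORE, AFTER))
        expected = good.read_text()
        actual = transform(start.read_text())
        if actual != expected:
            num_failed += 1
            print("failure on file:", start.name)
    return num_tests, num_failed


# Tiny demonstration with a stand-in transform (upper-casing the text).
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "OneBeforeTool.htm").write_text("note a")
    (root / "OneAfterTool.htm").write_text("NOTE A")
    (root / "TwoBeforeTool.htm").write_text("note b")
    (root / "TwoAfterTool.htm").write_text("note b")  # deliberately wrong
    result = run_regimen(root, str.upper)
    print(result)
```

Returning the failure count, as test_regimen does, lets the caller decide how to report the outcome.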

/****************************************/

int display_difference(string a, string b) { /* Dump Out Difference */

/****************************************/

handle hPA, hPB; /* Pools */

string la, lb; /* Line for A and B */

string s1; /* General */

int ra, rb; /* Results */

int cx, sa, sb, size; /* Sizes */

int ln; /* Line Number */

/* */

hPA = PoolCreate(a); /* Item A */

hPB = PoolCreate(b); /* Item B */

ln = 1; /* First line */

la = ReadLine(hPA); ra = GetLastError(); /* Get the first line */

lb = ReadLine(hPB); rb = GetLastError(); /* Get the first line */

The display_difference function, called when the result is not the expected file, needs to run a line by line comparison of the two files it receives as parameters. It uses String Pool objects to facilitate this because they are very efficient at reallocating memory as the size increases on repeated reads, making it fast on extremely large files. First, we create the String Pool Objects with the PoolCreate function. Once we have our objects, we can use the ReadLine function to read the first line from each of our files.

while ((ra == ERROR_NONE) || (rb == ERROR_NONE)) { /* Loop to end */

if (la != lb) { /* Not the same */

AddMessage(" %3d A: %s", ln, la); /* First line */

AddMessage(" B: %s", lb); /* Second line */

sa = GetStringLength(la); sb = GetStringLength(lb); /* Get lengths */

size = sa; if (sb > size) { size = sb; } /* Get larger */

cx = 0; /* Compare and create result */

s1 = PadString("", size); /* Create a padded field */

As long as either file still has a line to read (the ReadLine function returns an error if there is no next line or it cannot read the next line), we compare the two current lines. If they’re not equal, we print out the first and second line to the standard log before getting the length of each string with the GetStringLength function. We need to know which is longer, so we set the size variable equal to the length of the first string and compare it against the length of the second. If the second is longer, we set size equal to its length instead. We’re going to iterate over the longer string, so we initialize an iterator variable named cx and create a blank result string the size of our longest string. This blank string is the one that illustrates the differences between the lines, with a ‘^’ character in the position of every differing character. When we output it below the two lines, it effectively has a caret pointing to every position at which the lines differ.

while (cx < size) { /* For all characters */

if (la[cx] != lb[cx]) { s1[cx] = '^'; } /* Set the marker */

cx++; /* Next */

} /* end character match */

AddMessage(" : %s", s1); /* Difference line */

} /* end mismatch */

ln++; /* Add to line index */

la = ReadLine(hPA); ra = GetLastError(); /* Get the next line */

lb = ReadLine(hPB); rb = GetLastError(); /* Get the next line */

} /* end loop and compare */

return ERROR_NONE; /* Exit, no error */

} /* end routine */

Now that we’ve created our blank comparison string, we need to fill it. While our iterator variable is less than size, if the character at this position in each string is different, we mark the corresponding character in our comparison string with a ‘^’ character. Either way, after checking whether the characters match at that position, we add one to our iterator to move to the next character in the strings. Once we’ve iterated over each character in the lines, we print our comparison string to the log, right below the compared lines, to show which segments of the lines are different. After the lines are compared, we increment the line counter by one, read the next lines, and keep going until every line is read, comparing each pair and printing any discrepancies before finally returning.

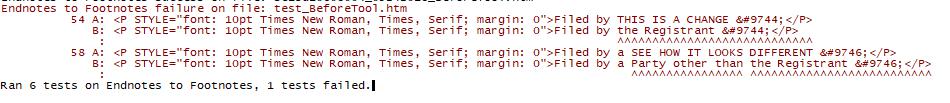

This makes our output look like this:

You can see then how the third line, below the “B” line in the comparison (which is the string s1 in our script) is full of empty spaces, except for the ‘^’ characters, which point to areas in our second line that are different from the first line.
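To experiment with the caret marker idea without running the DVT, here is a minimal Python sketch of the same technique. It is an illustration, not the Legato implementation: the function names `mark_differences` and `report_differences` are hypothetical, and `itertools.zip_longest` stands in for the “keep reading until both inputs are exhausted” loop so that a longer line or a longer file still gets compared.

```python
from itertools import zip_longest


def mark_differences(la, lb):
    """Return a string with '^' under every position where la and lb differ."""
    marks = []
    for ca, cb in zip_longest(la, lb):  # None pads the shorter line
        marks.append(" " if ca == cb else "^")
    return "".join(marks)


def report_differences(a, b):
    """Collect a three-line report for every pair of lines that differ."""
    report = []
    pairs = zip_longest(a.splitlines(), b.splitlines(), fillvalue="")
    for ln, (la, lb) in enumerate(pairs, start=1):
        if la != lb:
            report.append("%3d A: %s" % (ln, la))
            report.append("    B: %s" % lb)
            report.append("     : %s" % mark_differences(la, lb))
    return report


# Two small made-up inputs that differ on lines 2 and 3.
for line in report_differences("one\ntwo\nthree", "one\ntvo\nthree!"):
    print(line)
```

The three prefixes are the same width, so the carets line up under the characters they flag when the log is shown in a fixed-width font.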

As I mentioned above, this method of testing is called “black box” testing or “behavioral testing”. We’re not concerned with the code of the script at all; we’re simply giving it an input and comparing the output to expected values to ensure that our scripts are working as we expect them to. Of course, this test isn’t foolproof, because it’s only as good as the sample files we give it. If we give the DVT 100 different sample files to run, then our test will be more reliable than if we only have 2-3 files to run it against. Every time we encounter a file that requires our Endnotes to Footnotes script, we need to make sure we add it, along with its confirmed “good” result, to the “File Sets” folder in the same folder as this script file.

If we have multiple scripts to test, we can write multiple DVTs like this in the exact same format. If they’re all the same format with the same function names, we can actually write another script that can open up each of our DVTs and execute each of them one at a time. Using this, we could expand our testing regimen to include multiple script tests that can all be completed easily with the press of a single button. Very slick.
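As a rough sketch of what such a runner could look like, here is the idea in Python. Everything in it is hypothetical: `run_all`, the two stand-in regimen functions, and the second script name are made up for illustration, and the only assumption carried over from the DVT is that each regimen returns its number of failed tests.

```python
def run_all(dvts):
    """Run each DVT callable and return {name: failure_count}.

    Each callable is assumed to return its number of failed tests.
    """
    results = {}
    for name, regimen in dvts.items():
        results[name] = regimen()
    return results


# Stand-in regimens; real ones would run a script against its file set.
def endnotes_dvt():
    return 0


def another_dvt():
    return 2


summary = run_all({
    "Endnotes To Footnotes": endnotes_dvt,
    "Another Script": another_dvt,
})
total_failed = sum(summary.values())
for name, failed in summary.items():
    status = "passed" if failed == 0 else "%d error(s)" % failed
    print("%s: %s" % (name, status))
print("total errors:", total_failed)
```

Because every DVT shares the same entry point and return convention, the runner needs no knowledge of what each test actually does.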

Here’s our full DVT test for the Endnotes to Footnotes script:

//

// Endnotes to Footnotes DVT

// -------------

//

// Title: Endnotes To Footnotes Test

//

// Description: Test the endnotes to footnotes

//

// Functions: Endnotes To Footnotes

//

// Revisions: 10-22-18 SCH Create

//

// Author: SCH

//

// Comments: None

//

// Status: Complete

// -----------------------------------------------------------------------------

#define BEFORE "BeforeTool"

#define AFTER "AfterTool"

#define TEST "Test"

int test_regimen();

int display_difference(string a, string b);

int main() {

int rc;

ProgressOpen("Endnotes To Footnotes Test", 0);

rc = test_regimen();

if (rc == 0) {

MessageBox('I', "Endnotes To Footnotes Test Passed");

}

else {

MessageBox('X', "Endnotes To Footnotes Test FAILED.\r\r%d error(s).", rc);

}

ProgressClose();

return 0;

}

int test_regimen() {

string fp_script;

string files[];

string start_fn;

string test_contents;

string success_contents;

string end_fn;

string test_fn;

int num_files;

int num_tests;

int num_failed;

int ix;

AddMessage("Endnotes To Footnotes DVT");

fp_script = GetScriptFolder();

fp_script = AddPaths(fp_script,"File Sets");

files = EnumerateFiles(AddPaths(fp_script,"*.htm"));

num_files = ArrayGetAxisDepth(files);

for (ix = 0; ix<num_files; ix++){

if (FindInString(files[ix],BEFORE)>0){

ProgressSetStatus(1, "Processing: %s", files[ix]);

num_tests++;

start_fn = files[ix];

end_fn = ReplaceInString(start_fn, BEFORE, AFTER);

test_fn = ReplaceInString(start_fn, BEFORE, TEST);

RunMenuFunction("FILE_OPEN", "Filename: "+AddPaths(fp_script,start_fn));

RunMenuFunction("EXTENSION_ENDNOTES_REMAP");

RunMenuFunction("FILE_SAVE_AS", "Filename: "+AddPaths(fp_script,test_fn));

RunMenuFunction("FILE_CLOSE");

success_contents = FileToString(AddPaths(fp_script,end_fn));

test_contents = FileToString(AddPaths(fp_script,test_fn));

if (success_contents!=test_contents){

LogSetMessageType(LOG_ERROR);

AddMessage("Endnotes to Footnotes failure on file: %s",start_fn);

display_difference(success_contents,test_contents);

num_failed++;

}

else{

LogSetMessageType(LOG_NONE);

AddMessage("Endnotes to Footnotes success on file: %s",start_fn);

}

}

}

LogSetMessageType(LOG_NONE);

AddMessage("Ran %d tests on Endnotes to Footnotes, %d tests failed.",num_tests,num_failed);

return num_failed;

}

/****************************************/

int display_difference(string a, string b) { /* Dump Out Difference */

/****************************************/

handle hPA, hPB; /* Pools */

string la, lb; /* Line for A and B */

string s1; /* General */

int ra, rb; /* Results */

int cx, sa, sb, size; /* Sizes */

int ln; /* Line Number */

/* */

hPA = PoolCreate(a); /* Item A */

hPB = PoolCreate(b); /* Item B */

ln = 1; /* First line */

la = ReadLine(hPA); ra = GetLastError(); /* Get the first line */

lb = ReadLine(hPB); rb = GetLastError(); /* Get the first line */

while ((ra == ERROR_NONE) || (rb == ERROR_NONE)) { /* Loop to end */

if (la != lb) { /* Not the same */

AddMessage(" %3d A: %s", ln, la); /* First line */

AddMessage(" B: %s", lb); /* Second line */

sa = GetStringLength(la); sb = GetStringLength(lb); /* Get lengths */

size = sa; if (sb > size) { size = sb; } /* Get larger */

cx = 0; /* Compare and create result */

s1 = PadString("", size); /* Create a padded field */

while (cx < size) { /* For all characters */

if (la[cx] != lb[cx]) { s1[cx] = '^'; } /* Set the marker */

cx++; /* Next */

} /* end character match */

AddMessage(" : %s", s1); /* Difference line */

} /* end mismatch */

ln++; /* Add to line index */

la = ReadLine(hPA); ra = GetLastError(); /* Get the next line */

lb = ReadLine(hPB); rb = GetLastError(); /* Get the next line */

} /* end loop and compare */

return ERROR_NONE; /* Exit, no error */

} /* end routine */

Steven Horowitz has been working for Novaworks for over seven years as a technical expert with a focus on EDGAR HTML and XBRL. Since the creation of the Legato language in 2015, Steven has been developing scripts to improve the GoFiler user experience. He is currently working toward a Bachelor of Sciences in Software Engineering at RIT and MCC.
